Opportunity Solution Trees

Mapping our options toward a desired outcome

Tying experiments to outcomes

In a prior article covering An Experiment Canvas, I talked briefly about how experiments tie to the outcomes we are trying to achieve and the problems we are trying to solve (Know the problem you are solving).

I actually introduced an Opportunity Solution Tree (OST) in that article, but did not explicitly call it out.

In this article, I want to go more in depth on Opportunity Solution Trees: what they are, how they are used, how to create one, and how I think about them slightly differently (but only slightly) from folks like Teresa Torres, who really introduced them broadly to the Product community in her book “Continuous Discovery Habits”. This book, by the way, is a must-have for anyone who works in software product development - not just folks in product-specific roles.

What is an Opportunity Solution Tree?

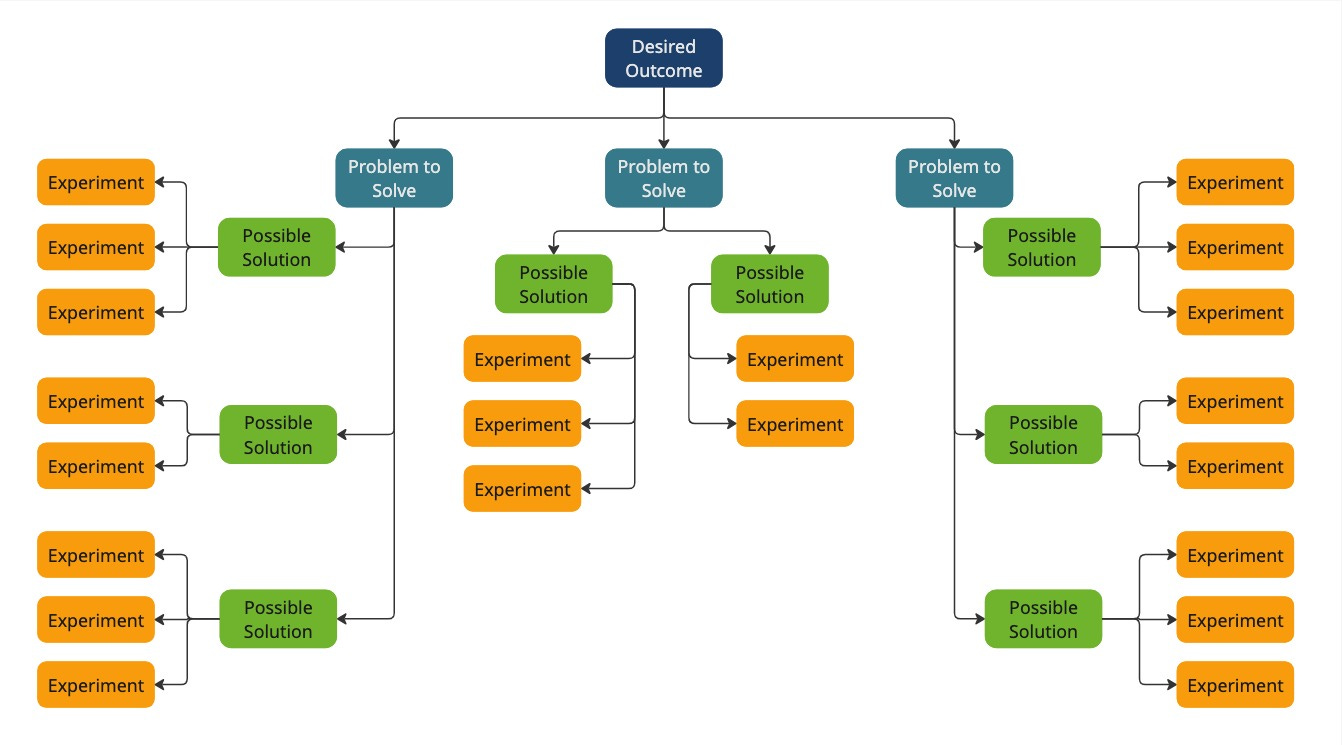

From my perspective, an Opportunity Solution Tree is a means of connecting the experiments we are running to the desired outcome we are pursuing. It is a tool that helps us to think broadly and deeply about the problem space and then make choices about where we are going to put our energy.

The Basic Structure

We start with a desired outcome.

We identify potential barriers to that outcome (problems).

We look at possible solutions to each problem.

Finally, we devise experiments we can run related to a possible solution.
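The four levels above nest naturally, so they can be sketched as a small tree of data classes. This is only an illustration of the shape, not a prescribed tool: the class names, the `paths` helper, and the sample blog content are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Experiment:
    name: str


@dataclass
class Solution:
    name: str
    experiments: List[Experiment] = field(default_factory=list)


@dataclass
class Problem:
    name: str
    solutions: List[Solution] = field(default_factory=list)


@dataclass
class Outcome:
    name: str
    problems: List[Problem] = field(default_factory=list)


def paths(outcome: Outcome) -> List[Tuple[str, str, str, str]]:
    """Return every outcome -> problem -> solution -> experiment path."""
    return [
        (outcome.name, problem.name, solution.name, experiment.name)
        for problem in outcome.problems
        for solution in problem.solutions
        for experiment in solution.experiments
    ]


# Hypothetical sample tree, used only to show the structure.
tree = Outcome(
    "Increase engagement on the blog",
    [
        Problem(
            "Readers wade through irrelevant content",
            [
                Solution(
                    "Personalized article recommendations",
                    [Experiment("Release clickable article tags")],
                )
            ],
        )
    ],
)

for path in paths(tree):
    print(" -> ".join(path))
```

Reading a path from right to left answers the question the tree exists to answer: why are we running this experiment?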

Where my approach differs (and why)

You might notice that my structure is slightly different from the norm. Where I talk of “Problems to Solve”, a standard Opportunity Solution Tree speaks of “Opportunities”, and where I mention “Experiments”, a standard Opportunity Solution Tree mentions “Assumption Tests”.

I think Experiments and Assumption Tests are pretty much the same. This is a matter of word choice and I choose Experiment because it has been a part of my vernacular for many years. I also have some tools and structures around experiments that I like to bring into organizations with me. It is easier to introduce them when there is alignment in vernacular.

Problems versus Opportunities

Now - I do want to address my choice of “Problems” versus “Opportunities” in more depth.

First of all, I have been saying the phrase “Know the problem you are solving” for years - well before I was first introduced to Opportunity Solution Trees. But there’s more to it than just a coined phrase.

Teresa Torres herself describes “Opportunities” as “[...] the customer needs, pain points, and desires that, if addressed, will drive your desired outcome.”

Those sound like problems to me.

In other articles, Teresa uses the terms Opportunity and Problem interchangeably.

This is not intended to be a critique of Teresa’s work. Far from it. I think her work is brilliant and I am grateful for her influence in this space. I am pointing this out only to highlight that the difference between “Problem” and “Opportunity” is one of nuance in this context - they are essentially the same concept articulated from differing perspectives.

And I choose the “Problem” perspective over the “Opportunity” perspective.

Take a look at these two phrases:

“Know the problem you are solving”

“Know the opportunity you are pursuing”

With a problem, we are solving it. We believe this to be a barrier and we are trying to reduce or eliminate the impact. There is a focus on risk and avoidance.

With an opportunity, we are pursuing it. We believe this to be desirable and we are trying to achieve it. There is a focus on benefit and attainment.

I want the benefit and attainment focus to be on the outcome, not on the steps to get there. Along the way, I want folks thinking conservatively and critically. And I am looking to create subtle contextual cues to help them, such as calling it a problem.

I find there is little more dangerous to an organization than an executive enamored with the potential benefit of an opportunity. The more things we frame as beneficial, the harder it is to give them up and move on to the next possible approach.

Alright - that’s enough of that. Whatever perspective you take, Opportunity Solution Trees are incredibly helpful. Let’s move on.

Creating an Opportunity Solution Tree

Involve the team

Before we get into the specific steps, I want to stress how important it is that this work not be done in a silo, regardless of how “efficient” you think it is to have one “expert” do the work. In any work where we need to have a shared mental model, high-bandwidth candid communication is the fastest and most effective path.

Opportunity Solution Trees are intended to be a group activity. At a minimum, the Product Owner, a Software Engineer, and a Designer should be present.

My favorite way to approach this is to have each participant create their own OST in private. I know, I just said not to work in a silo - stick with me. Then, everyone comes together and runs a 1-2-4-all or a Compare and Combine.

If you don’t have time for that, then I suggest the team come together and co-create one OST in real-time.

The worst approach is to have one member of the team create an OST and then get feedback from others.

No. Wait. The worst approach is to have one member of the team create an OST and then tell everyone they need to use it as is.

Start with a desired outcome

You need to have some notion of who your target audience is and the value proposition you intend to offer them. Within that context, there are impacts you want to have on the audience’s experience or business - these are your outcomes. Outcomes are often measurable and directional. They are impact oriented. For example, we might want to increase engagement with our content or decrease production lead time.

Pay attention here. Make sure you are really looking at outcomes and not outputs. Remember the desired impact.

For example, let’s use engagement. Let’s say I want to increase the engagement on my blog. It would be an easy mistake for me to determine that an increase in engagement would come from an increase in content and set an “outcome” of a 20% increase in published articles per month. But more articles do not ensure engagement. Rather than measuring the number of articles, I need to be looking at engagement with the articles - my outcomes are more likely that people are commenting, readers explicitly like an article, the articles are being syndicated, and the articles are being referenced.

| Output | Outcomes |
| --- | --- |
| Articles published per month | Readers are commenting |
| | Readers are liking |
| | Articles are syndicated |
| | Articles are being referenced |

Identify potential barriers to that outcome

Let’s say we decided on an increase in customer interaction, measured as a combination of comments and likes. We now have our desired outcome.

Next is brainstorming the potential barriers to that outcome.

Here again, I suggest the 1-2-4-all or Compare and Combine. You can give each participant some time to come up with their own ideas prior to the meeting so long as you are confident everyone will show up to the session with their pre-work completed. Otherwise, set aside as little as 5 minutes in the session and give everyone a chance to generate some ideas.

I would say you are looking for a minimum of 3 unique problems. You may find that you come up with many more. That’s fine. It is usually an indication that you need to do additional customer research to better understand their most prevalent pain-points, needs, and desires. Capture whatever you come up with and allow the process of continuous discovery and refinement to better inform what needs to be addressed sooner rather than later and what can be dropped entirely.

Take a Break?

Whew. That was a lot of work. You have probably spent a couple of hours working individually and another couple of hours as a team to get this far. Now is a good time to take a step away for several hours. Give everyone time to sit with the ideas and get acclimated. Give everyone time to rest.

Look at possible solutions to each problem

Narrow the problems, if needed

If you have three or four identified problems, generating possible solutions for all of them is feasible. If you have identified five or more problems, I suggest you go through a quick ranking exercise to select the two to four you are going to focus on trying to solve first.

There are a number of techniques you can use to narrow the set. A couple of quick and easy ones are:

Dot voting

Have the team members vote on which problems they think are the most important.

Give each team member 3 votes (using stickers or marks).

Allow them to distribute their votes across the proposed problems, either all on one problem or spread across several.

Tally the votes and focus on the three problems with the most votes.
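If the votes are collected digitally rather than with stickers, the tally is a few lines of code. Here is a minimal sketch, assuming each ballot is a list of problem names in which repeats mean several votes on one problem. The function name, participant roles, and problem labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

VOTES_PER_PERSON = 3  # each team member gets 3 votes


def tally_dot_votes(
    ballots: Dict[str, List[str]], top_n: int = 3
) -> List[Tuple[str, int]]:
    """Count the votes and return the top_n problems with their totals."""
    counts: Counter = Counter()
    for voter, votes in ballots.items():
        if len(votes) > VOTES_PER_PERSON:
            raise ValueError(f"{voter} cast more than {VOTES_PER_PERSON} votes")
        counts.update(votes)
    return counts.most_common(top_n)


# Hypothetical ballots: votes may be stacked on one problem or spread out.
ballots = {
    "product_owner": ["slow search", "slow search", "no tags"],
    "engineer": ["no tags", "long articles", "slow search"],
    "designer": ["no tags", "no tags", "long articles"],
    "writer": ["dated layout", "no tags", "no tags"],
}

print(tally_dot_votes(ballots))
```

When two problems tie for the last slot, talk it through as a team rather than letting the sort order decide.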

Weighted MoSCoW Prioritization

Categorize each problem based on four levels of priority: Must have, Should have, Could have, and Won’t have (for now).

Working individually, have each member of the team label each problem as a "Must," "Should," "Could," or "Won’t."

For each label on a problem, increment its score as follows:

Must - 4

Should - 3

Could - 1

Won’t - 0

For example, if a problem is given 2 Must, 1 Should, and 2 Could labels, the total score for that problem would be (2*4)+(1*3)+(2*1) = 13.

Select the two to four highest scoring problems
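The scoring above is simple enough to do on a whiteboard, but a short sketch makes the arithmetic explicit. The weights come from the list above. The function names and the "Problem A/B/C" labels are placeholders I made up for the example.

```python
from typing import Dict, List, Tuple

# Weights as listed above.
WEIGHTS = {"must": 4, "should": 3, "could": 1, "wont": 0}


def moscow_score(labels: List[str]) -> int:
    """Sum the weights of every label the team gave to one problem."""
    return sum(WEIGHTS[label] for label in labels)


def rank_problems(
    labels_by_problem: Dict[str, List[str]], top_n: int = 4
) -> List[Tuple[str, int]]:
    """Return the top_n problems, highest score first."""
    scored = [(name, moscow_score(labels)) for name, labels in labels_by_problem.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


# Hypothetical labels from a five-person team.
labels_by_problem = {
    "Problem A": ["must", "must", "should", "could", "could"],  # the worked example
    "Problem B": ["should", "should", "could", "wont", "wont"],
    "Problem C": ["must", "must", "must", "should", "should"],
}

print(rank_problems(labels_by_problem, top_n=2))
```

Problem A reproduces the worked example: two Must, one Should, and two Could labels add up to 13.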

Generate Possible Solutions

We are going to return to our 1-2-4-all or Compare and Combine techniques here.

In this instance, we are generating possible solutions to the identified problems to solve.

Keep in mind that solutions can be the creation of, modification of, or removal of a product, feature, service, workflow, process, documentation, or anything else that we offer to customers.

Make an effort to keep solutions relatively small. Smaller solutions allow us to create simple things in small steps, release ridiculously often, and validate before, during, and after.

Make an effort to focus only on the problem at hand. If we are trying to solve two or more of the problems simultaneously, our ability to run experiments and measure for results is going to get far more complex and confusing.

Narrow the possible solutions, if needed

For each problem, if there are only one or two solutions, coming up with potential experiments is feasible. If you have four or more possible solutions to a problem, you might want to narrow the set you want to focus on first.

Dot Voting can work here, whereas MoSCoW is probably less applicable. A couple more techniques you could use are:

Impact versus Effort

Select the possible solutions that represent the higher impact with lower effort

Draw a 2x2 matrix where the x-axis represents "Effort" (low to high) and the y-axis represents "Impact" (low to high).

Evaluate each solution based on how much effort it will take to implement and the potential positive impact it will have on the problem.

Focus on solutions that fall into the high-impact, low-effort quadrant as your priority solutions.

Choose the top 1-2 solutions from this quadrant.
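The same sorting can be sketched in code once each solution has an effort and an impact rating. The 1-10 scale, the midpoint of 5 that splits the quadrants, the tie-breaking order, and the sample solutions are all assumptions for illustration. The 2x2 itself does not prescribe them.

```python
from typing import Dict, List, NamedTuple


class Rating(NamedTuple):
    effort: int  # assumed scale: 1 (trivial) to 10 (huge)
    impact: int  # assumed scale: 1 (negligible) to 10 (substantial)


def priority_solutions(
    ratings: Dict[str, Rating], midpoint: int = 5, limit: int = 2
) -> List[str]:
    """Pick up to `limit` solutions from the high-impact, low-effort quadrant."""
    quadrant = [
        (name, rating)
        for name, rating in ratings.items()
        if rating.impact > midpoint and rating.effort <= midpoint
    ]
    # Highest impact first; among equals, lowest effort first.
    quadrant.sort(key=lambda item: (-item[1].impact, item[1].effort))
    return [name for name, _ in quadrant[:limit]]


# Hypothetical team ratings.
ratings = {
    "Article tags": Rating(effort=2, impact=8),
    "Full recommendation engine": Rating(effort=9, impact=9),
    "Reading-time labels": Rating(effort=3, impact=6),
    "Redesign the home page": Rating(effort=8, impact=4),
}

print(priority_solutions(ratings))
```

The numbers are only a conversation aid. A solution sitting right on the midpoint deserves a discussion, not a cutoff.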

Multi-Criteria Decision Analysis (MCDA)

Use a formal decision-making process that takes into account multiple criteria, both qualitative and quantitative.

This is similar to the Weighted MoSCoW Prioritization, except the team chooses the categories and weighting.

Identify the most important criteria for choosing a solution (e.g., customer satisfaction, cost, time to market).

Keep this to no more than the four most important criteria

Assign weights to each criterion based on importance.

Use integers

No two criteria should have the same weighting

Evaluate and score each solution based on the criteria.

Do this individually, so that each possible solution has multiple scores per criterion

Sum the weighted scores and choose the solutions with the highest total.
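Here is a minimal sketch of the steps above. It assumes each participant scores every solution from 1 to 5 on every criterion, with higher always meaning better (so a high "cost" score means cheap). The scale, the function names, the criteria weights, and the sample scores are assumptions for the example.

```python
from typing import Dict, List, Tuple


def validate_weights(weights: Dict[str, int]) -> None:
    """Enforce the rules above: at most four criteria, distinct integer weights."""
    if len(weights) > 4:
        raise ValueError("Use no more than four criteria")
    if not all(isinstance(weight, int) for weight in weights.values()):
        raise ValueError("Weights must be integers")
    if len(set(weights.values())) != len(weights):
        raise ValueError("No two criteria may share a weight")


def rank_solutions(
    weights: Dict[str, int], scores: Dict[str, Dict[str, List[int]]]
) -> List[Tuple[str, int]]:
    """Sum weight * individual scores per solution; highest total first."""
    validate_weights(weights)
    totals = {
        solution: sum(
            weights[criterion] * sum(individual_scores)
            for criterion, individual_scores in by_criterion.items()
        )
        for solution, by_criterion in scores.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights and scores from three participants.
weights = {"customer satisfaction": 3, "time to market": 2, "cost": 1}
scores = {
    "Article tags": {
        "customer satisfaction": [4, 5, 4],
        "time to market": [5, 4, 4],
        "cost": [4, 4, 5],
    },
    "Recommendation engine": {
        "customer satisfaction": [5, 5, 4],
        "time to market": [1, 2, 2],
        "cost": [2, 1, 2],
    },
}

print(rank_solutions(weights, scores))
```

Summing the individual scores, rather than averaging them, keeps the arithmetic transparent. As long as everyone scores every solution, the ranking comes out the same either way.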

Take a Break?

Again - that was a lot of work. Now that you have narrowed the list of possible solutions, give people time to think about them. You’re going to come up with possible experiments for each possible solution next. It will help if people have had time to process the progress so far and think a bit about what some experiments might look like.

Devise experiments we can run related to a possible solution

Here, we are trying to make informed decisions about how we are actually progressing toward our desired outcome. For the sake of this article, I am going to assume that the desired outcome has been verified. So, at this point, we’ve identified a potential problem which we believe is a barrier to our desired outcome.

Take a look at my previous article on an Experimentation Canvas for more on how I structure experiments.

Is this a real problem?

Is this a newly identified potential problem or has this been known for a while? Has the organization run any experiments that validate the potential problem? These experiments could be very simple (examining user data to check a hypothesis), more involved (surveys and polls), or quite involved (A/B testing). If the potential problem hasn’t yet been sufficiently validated, start your experiments there. No sense solving a problem that doesn’t actually exist.

By the way - “someone in authority says so” is NOT sufficient validation. Do they have the data? Have you seen the data? Do you agree with their conclusions?

Small steps toward a possible solution

If we can empirically verify the problem, then we move on to experiments intended to test our possible solutions.

Look for small steps that can inform us along the way. Remember - small steps allow us to create simple things, release ridiculously often, and validate before, during, and after. Small steps allow us to get clear signal, learn faster, adjust sooner, and produce better results.

Small steps might include using prototypes to get feedback or creating a first simple increment.

What do I mean by a first simple increment? Let’s go back to our earlier outcome of increasing user engagement on my blog. We believe one of the problems is that users have to weed through content that is not relevant to their interests in order to find content they want. We’ve validated this problem via user surveys and analysis of user activity data. And we’ve decided that a potential solution is to provide personalized article recommendations based on a user’s interests, experience level, and preferred article length.

This is actually quite involved. Just the fact that there are three criteria for personalized article recommendations makes it somewhat complicated. Then add in that we may not have a clear idea of what represents the user's interests - is it a flat list of topics, a hierarchy of subjects, or a web of concepts? On top of which, we need to remember that this is a hypothesis.

Here is one way I might break this down into small increments:

Start with tagging

First release - Create article tags, show tags in article header, and allow users to click any tag and see all articles with that tag

Monitor for use

Are people using this to find articles?

Are they engaging more with articles they found through tags?

Are they engaging more with articles that have certain tags?

What tags are performing best?

Are there tags that seem correlated?

Second possible release - Allow users to favorite a tag and highlight their favorited tags in articles

Monitor for use

Are people favoriting tags?

Are people engaging more or less with tags?

Are they engaging more or less with articles they found through tags?

Are they engaging more or less with articles that have favorited tags?

What tags are being favorited the most?

Are there tags that seem correlated?

Third possible release - Allow users to update tag favorites in their profile

Monitor for use

Are people updating tags via their profile?

Are people updating tags more via articles or profiles?

Are people engaging more or less with tags?

Are they engaging more or less with articles they found through tags?

Are they engaging more or less with articles that have favorited tags?

What tags are being favorited the most?

Are there tags that seem correlated?

Fourth possible release - Put articles in three buckets (quick read, insights, deep dive) based on estimated read time, show “Article Length” in article header, and allow users to click on length and see all articles with that length

Monitor for use

Fifth possible release - Add Preferred Article Length to user profiles

Monitor for use

This is but one way this could be broken down. You might think I am spot on breaking it down like this. You might think I missed the point and approached it all wrong. Truth is, it is probably neither of these extremes and is somewhere in the middle. It is okay to get it “wrong” - working small allows you to learn quickly and adjust.

No matter how you approach the initial break-down, I would typically represent this all in a user story map so that everyone can clearly see the work we are planning to do next and how it ties to the overall user experience. Be prepared for it all to change after you make first contact with the user. Given this, you might as well make contact early and often to reduce the pain of the change.